Hacking Holodeck for VCF 9

Update: 20250630 – The official Holodeck 9 has now been released, so this guide is only useful if you want to deploy manually from scratch.

Holodeck has become the standard for home lab VCF, and it’s easy to see why. It’s probably going to be a while before the official scripts are released, so here are the workarounds I’ve used to deploy a nested VCF 9 platform in my lab. I’ve probably oversized this environment, so I’m planning to tune it a bit later.

This guide assumes you’re running Holodeck in Advanced mode, where you provide your own DNS/NTP/DHCP/BGP infrastructure, rather than Basic mode, which deploys all of that on the CloudBuilder VM.

Create 6x ESXi Hosts

24 vCPU / 128 GB RAM each. VCF Automation deploys as a 24 vCPU VM inside the nested environment, and a VM can’t have more vCPUs than its host, hence the large size.

4x NICs connected to your Holodeck trunk port group (PG).

Disk layout: 40 GB OS / 150 GB vSAN cache / 1 TB vSAN capacity (thin provisioned).

If you don’t plan to run Automation, you can reduce the vCPU count.
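To see what the physical host has to back, the per-host figures above multiply out as follows. This is a minimal Python sketch, not part of Holodeck: the `NestedHost` and `budget` names are hypothetical, it assumes 1 TB = 1024 GB, and it treats the thin-provisioned disks as a ceiling rather than day-one consumption.

```python
# Rough capacity budget for the nested ESXi hosts described above.
# Per-host figures come from this post; the totals are simple arithmetic.
from dataclasses import dataclass


@dataclass(frozen=True)
class NestedHost:
    vcpus: int
    ram_gb: int
    # OS, vSAN cache, vSAN capacity (assumes 1 TB = 1024 GB)
    disks_gb: tuple[int, ...]


def budget(host: NestedHost, count: int) -> dict[str, int]:
    """Return the totals the physical host must be able to back."""
    return {
        "vcpus": host.vcpus * count,
        "ram_gb": host.ram_gb * count,
        # Disks are thin provisioned, so this is a ceiling, not day-one usage.
        "max_disk_gb": sum(host.disks_gb) * count,
    }


if __name__ == "__main__":
    holodeck_host = NestedHost(vcpus=24, ram_gb=128, disks_gb=(40, 150, 1024))
    print(budget(holodeck_host, count=6))
```

For six hosts this prints `{'vcpus': 144, 'ram_gb': 768, 'max_disk_gb': 7284}`, i.e. 144 vCPUs, 768 GB of RAM and up to 7,284 GB of thin-provisioned disk.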

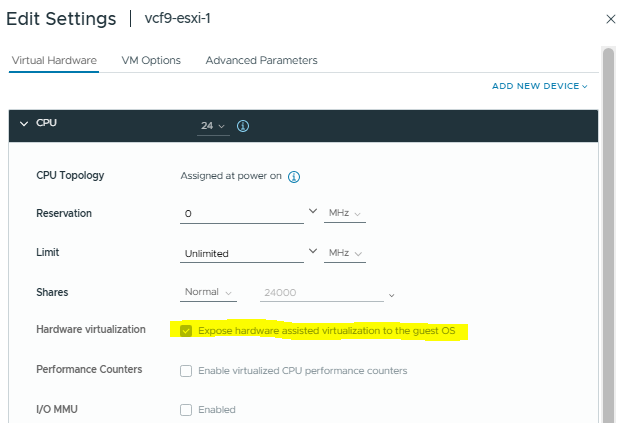

Ensure you have enabled “Hardware virtualization” (Expose hardware assisted virtualization to the guest OS) on each nested ESXi VM.
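vSphere stores that checkbox as `vhv.enable = "TRUE"` in the VM’s .vmx file. If you want to confirm it across all six hosts, a small sketch like the one below can scan .vmx files; it assumes you’ve downloaded them locally (for example via the datastore browser), and the script and its function names are my own illustration rather than a VMware tool.

```python
# Check that nested ESXi VMs expose hardware-assisted virtualization.
# vSphere records the setting as vhv.enable = "TRUE" in the .vmx file.
import sys
from pathlib import Path


def parse_vmx(text: str) -> dict[str, str]:
    """Parse key = "value" lines from a .vmx file into a dict (keys lowercased)."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        settings[key.strip().lower()] = value.strip().strip('"')
    return settings


def hv_enabled(vmx_text: str) -> bool:
    """True when vhv.enable is set to TRUE (case-insensitive)."""
    return parse_vmx(vmx_text).get("vhv.enable", "").upper() == "TRUE"


if __name__ == "__main__":
    # Usage: python check_vhv.py esxi-1.vmx esxi-2.vmx ...
    for path in sys.argv[1:]:
        state = "enabled" if hv_enabled(Path(path).read_text()) else "NOT enabled"
        print(f"{path}: hardware virtualization {state}")
```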

Install the ESXi 9 build listed in the VCF BOM and complete the standard VCF prerequisites (enable SSH, configure NTP, regenerate certificates, etc.).
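Before moving on, it’s worth confirming that SSH actually came up on every host. This sketch only tests TCP reachability on ports 22 (SSH) and 443 (HTTPS); it doesn’t validate NTP or certificates. The `esxi-N.vcf.sddc.lab` names are hypothetical placeholders for whatever FQDNs you gave your nested hosts.

```python
# Pre-flight check: confirm each nested ESXi host answers on SSH (22)
# and HTTPS (443) before moving on to VCF bring-up.
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or name not resolvable
        return False


def preflight(hosts: list[str], ports: tuple[int, ...] = (22, 443)) -> dict[str, dict[int, bool]]:
    """Map each host to {port: reachable}."""
    return {h: {p: port_open(h, p) for p in ports} for h in hosts}


if __name__ == "__main__":
    # Hypothetical host names: substitute your own nested host FQDNs.
    hosts = [f"esxi-{n}.vcf.sddc.lab" for n in range(1, 7)]
    for host, result in preflight(hosts).items():
        status = ", ".join(f"{p}:{'open' if ok else 'closed'}" for p, ok in result.items())
        print(f"{host}: {status}")
```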

With VCF 9, the Aria architecture has changed, and its components no longer reside on an AVN (Application Virtual Network) segment.

I’ve created new DNS entries for these components so I can still bring up older VCF versions without rolling those changes back, and I picked IP addresses that don’t conflict with existing Holodeck components.

Fleet Management (Replacement for SDDC Manager + Aria Suite Lifecycle)

fleet.vcf.sddc.lab – 10.0.0.162

Identity Broker (Replacement for vIDM)

broker.vcf.sddc.lab – 10.0.0.6

Aria Suite

vrops-9.vcf.sddc.lab – 10.0.0.160

vrops-cp-9.vcf.sddc.lab – 10.0.0.161

logs-9.vcf.sddc.lab – 10.0.0.7

vra-9.vcf.sddc.lab – 10.0.0.8
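Since these records have to coexist with the existing Holodeck entries, a quick sanity check can catch typos before bring-up. This Python sketch assumes the lab management subnet is 10.0.0.0/24 and that you supply any addresses Holodeck already uses via the hypothetical `taken` parameter; `dns_mismatches` then compares live forward (A) and reverse (PTR) lookups against the plan, as seen from the machine you run it on.

```python
# Sanity-check the VCF 9 DNS plan: every address in the lab subnet, no
# duplicate or already-used IPs, and forward/reverse lookups that agree.
import ipaddress
import socket

# Records from this post.
DNS_PLAN = {
    "fleet.vcf.sddc.lab": "10.0.0.162",
    "broker.vcf.sddc.lab": "10.0.0.6",
    "vrops-9.vcf.sddc.lab": "10.0.0.160",
    "vrops-cp-9.vcf.sddc.lab": "10.0.0.161",
    "logs-9.vcf.sddc.lab": "10.0.0.7",
    "vra-9.vcf.sddc.lab": "10.0.0.8",
}


def plan_problems(plan: dict[str, str], subnet: str = "10.0.0.0/24",
                  taken: frozenset[str] = frozenset()) -> list[str]:
    """Report out-of-subnet, already-taken and duplicate addresses."""
    net = ipaddress.ip_network(subnet)  # assumed lab subnet
    problems: list[str] = []
    seen: dict[str, str] = {}
    for name, ip in plan.items():
        if ipaddress.ip_address(ip) not in net:
            problems.append(f"{name}: {ip} is outside {net}")
        if ip in taken:
            problems.append(f"{name}: {ip} is already in use")
        if ip in seen:
            problems.append(f"{name}: {ip} duplicates {seen[ip]}")
        seen[ip] = name
    return problems


def dns_mismatches(plan: dict[str, str]) -> list[str]:
    """Compare live A and PTR lookups with the plan."""
    problems: list[str] = []
    for name, ip in plan.items():
        try:
            if socket.gethostbyname(name) != ip:
                problems.append(f"{name}: A record does not return {ip}")
            if socket.gethostbyaddr(ip)[0].lower() != name:
                problems.append(f"{ip}: PTR record does not return {name}")
        except OSError as err:  # covers gaierror and herror
            problems.append(f"{name}: lookup failed ({err})")
    return problems


if __name__ == "__main__":
    issues = plan_problems(DNS_PLAN) + dns_mismatches(DNS_PLAN)
    for issue in issues:
        print(issue)
    if not issues:
        print("DNS plan OK")
```

Run it from a machine that uses your Holodeck DNS server so the live lookups hit the same records the appliances will see.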

That’s it for now; the infrastructure is ready for deployment.

In the next post, I’ll show how to use these hosts to deploy VCF 9.